The use of AI in the healthcare sector is expanding at an unprecedented rate, with applications spanning diagnosis, drug development, robotic surgery, and predictive analytics.

However, although AI has the potential to transform healthcare and improve patient outcomes, it also raises substantial ethical issues that must be resolved.

The Ethical Implications of AI Use in Healthcare

1. Privacy and Data Security

One of the primary ethical concerns with the use of AI in healthcare is privacy and data security.

AI in healthcare generates vast amounts of data, including sensitive patient information, such as medical history, genetic information, and biometric data.

This data must be stored securely and protected from unauthorized access or misuse. Data quality matters as well: AI systems are only as good as the data they are trained on, and the quality of that data is critical to the accuracy and effectiveness of the AI.

As a result, there is a risk that AI systems could be trained on biased or incomplete data, leading to inaccurate or discriminatory outcomes.

For example, if an AI system is trained on a dataset that disproportionately represents a particular race or ethnicity, it may produce biased results that unfairly disadvantage other groups.

2. Informed Consent

Informed consent is a fundamental principle of medical ethics that requires patients to be fully informed about the risks and benefits of any medical intervention before giving their consent.

With the use of AI in healthcare, patients may not fully understand the implications of using AI in their treatment or the extent to which their data is being used.

Patients have the right to know how their data is being used, who has access to it, and what algorithms are used to analyze it.

AI’s use in healthcare also raises questions about how much decision-making power should be given to AI systems versus human healthcare providers.

If patients are to exercise their right to make informed healthcare decisions, they must be adequately educated about the role that artificial intelligence will play in their care.

3. Bias and Discrimination

AI systems are only as good as the data on which they are trained. As a result, there is a risk that AI systems will be trained on inadequate or incorrect data, resulting in inaccurate or prejudiced outputs.

For example, a system trained on a dataset that over-represents one racial or ethnic group may perform less accurately for under-represented groups, producing biased results that unfairly disadvantage them.

In healthcare, bias and discrimination can have serious consequences, leading to incorrect diagnoses, ineffective treatments, and disparities in health outcomes.

It is essential to ensure that AI systems are trained on diverse and representative datasets and that the algorithms used to analyze the data are transparent and auditable.

4. Accountability and Responsibility

Another ethical implication of using AI in healthcare is accountability and responsibility. With the increasing use of AI in healthcare, it can be challenging to determine who is responsible for the actions of AI systems.

Who is accountable for the outcome when an AI system makes a medical decision? Is it the healthcare provider, the AI developer, or the patient?

It is essential to establish clear lines of accountability and responsibility for the actions of AI systems in healthcare.

Healthcare providers must be trained to understand AI’s limitations and take responsibility for the decisions they make based on the recommendations of AI systems.

Developers of AI systems must also be held accountable for the accuracy and effectiveness of their systems.

5. Transparency and Explainability

Transparency and explainability are critical ethical considerations when using AI in healthcare. Just as patients have a right to know how their data is used, patients and clinicians need to know how an AI system arrives at its conclusions.

AI systems used in healthcare must be transparent and explainable to patients and healthcare providers.

Transparency and explainability also help to build trust between patients and healthcare providers.

Patients need to have confidence in the accuracy and reliability of AI systems used in their care, and transparency and explainability can help to ensure this.

Healthcare providers also need to understand how AI systems make their recommendations, so they can evaluate the results and make informed decisions about patient care.

6. Regulation and Governance

AI in healthcare raises important questions about regulation and governance. AI systems used in healthcare must be subject to the same regulatory standards as other medical devices and treatments.

In addition, the regulatory framework must ensure that AI systems used in healthcare are safe, effective, and accurate.

Regulation and governance are also necessary to ensure that AI systems used in healthcare are ethical and aligned with the values and principles of the medical profession.

The development and use of AI in healthcare must be guided by ethical principles such as transparency, fairness, and accountability.

This requires a multidisciplinary approach involving healthcare providers, AI developers, ethicists, policymakers, and patient advocates.

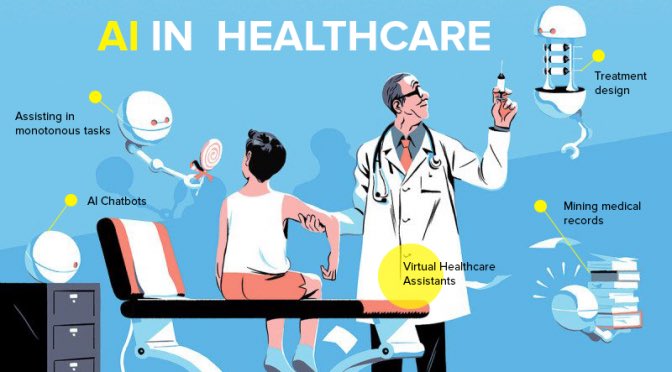

The Role of AI in Healthcare

Healthcare is one of many fields that artificial intelligence (AI) is transforming.

Despite the ethical challenges of using AI in healthcare, there is no denying the potential benefits that AI can bring to the healthcare industry. AI can improve patient outcomes, reduce healthcare costs, and increase efficiency and productivity.

AI can identify patterns in patient data that are not visible to the human eye, leading to more accurate diagnoses and more effective treatments.

AI can also predict patient outcomes, helping healthcare providers intervene early and prevent complications.

Furthermore, AI can automate tedious processes, allowing healthcare personnel to focus on more complex tasks that require human expertise.

Conclusion

In conclusion, AI in healthcare raises critical ethical questions that must be addressed to ensure that AI is used ethically and responsibly.

These ethical implications include privacy and data security, informed consent, bias and discrimination, accountability and responsibility, transparency and explainability, and regulation and governance.

It is essential to involve all stakeholders in developing and deploying AI systems, including healthcare providers, AI developers, ethicists, policymakers, and patient advocates.

This helps maximize the benefits of AI in healthcare while minimizing the ethical risks. By working together, we can ensure that AI is used in healthcare in a way that is safe, effective, and aligned with the values and principles of the medical profession.